Pipeline Builder: Visually Orchestrate Data Transformations

The Arkham Pipeline Builder provides a visual, canvas-based environment for orchestrating complex data transformations. It lets builders clean, consolidate, and transform raw and staging datasets into production-ready assets for analytics and AI.

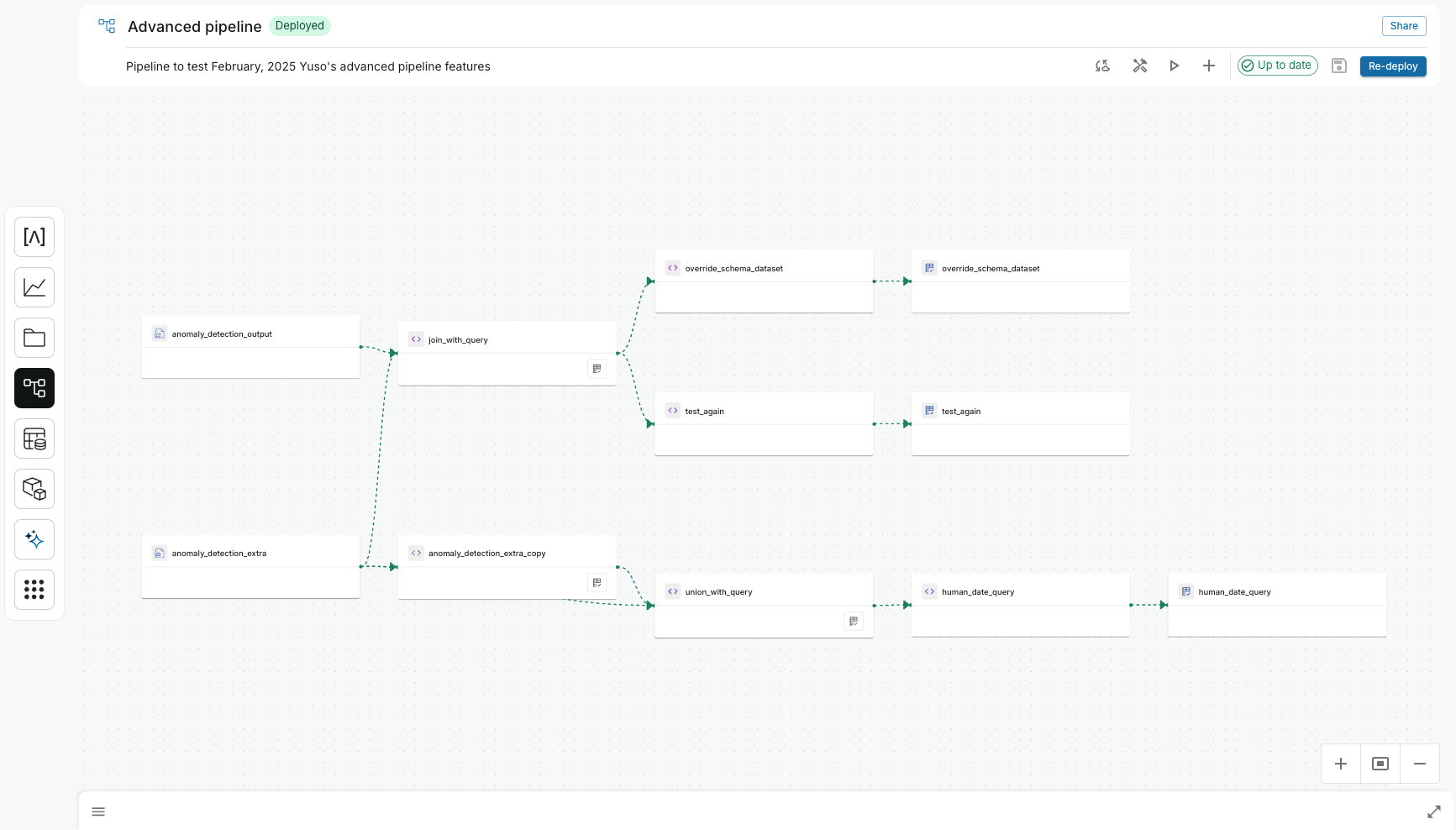

Instead of writing complex imperative scripts, you build pipelines by connecting dataset nodes and applying powerful transformations like joins, unions, and custom SQL queries in an interactive graph.

How It Works: A Node-Based Workflow

The Pipeline Builder represents your entire transformation logic as a directed acyclic graph (DAG). Each node in the graph is a dataset, and each connection represents a transformation that creates a new dataset from its parents.
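To make the DAG idea concrete, here is a minimal Python sketch. It is not Arkham's internal representation: the dataset names and the `execution_order` helper are hypothetical, and the only assumption is that each dataset records which parent datasets it is derived from. The standard-library `graphlib` module then yields an order in which every parent is built before its children.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each key is a dataset node, each value is the set
# of parent datasets that its transformation reads from.
pipeline = {
    "raw_orders": set(),
    "raw_customers": set(),
    "clean_orders": {"raw_orders"},
    "orders_enriched": {"clean_orders", "raw_customers"},
    "daily_revenue": {"orders_enriched"},
}


def execution_order(graph):
    """Return dataset names so that every parent precedes its children."""
    # static_order() raises graphlib.CycleError if the graph contains a cycle,
    # which is why a pipeline has to be acyclic to be runnable.
    return list(TopologicalSorter(graph).static_order())


order = execution_order(pipeline)
print(order)
```

Source nodes have no parents, so they always appear first; a derived dataset such as `orders_enriched` can only be computed once both of its parents exist.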

The development lifecycle is visual and interactive:

- Add Datasets: You start by adding existing datasets from the Data Catalog onto the canvas. These are your source nodes.

- Apply Transformations: Select source nodes and choose a transformation. You can use a UI-driven helper for common operations like joins and unions or write a custom SQL query in the embedded editor for more complex logic. The editor supports referencing other nodes in the pipeline.

- Preview and Iterate: Before committing, you can preview the results of your transformation. The platform uses a session compute cluster to run the query on sample data, allowing you to validate your logic in real time.

- Save and Version: Once you are satisfied with the preview, you apply the step, which creates a new dataset node on the canvas. Saving the pipeline creates a new, immutable version, ensuring that your work is tracked and reproducible.

- Deploy: When your pipeline is complete, you deploy a specific version. The deployed version can be run manually or set to execute on a recurring schedule, automating your data processing.
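The transform-and-preview steps above can be approximated locally to show the idea. The sketch below is only an illustration: it uses an in-memory SQLite database from the Python standard library as a stand-in for the session compute cluster, and the table names, sample rows, and `preview` helper are all hypothetical. It runs a join written as custom SQL against a handful of sample rows and caps the number of rows returned.

```python
import sqlite3

# Hypothetical sample rows standing in for two source nodes on the canvas.
orders = [(1, 10, 25.0), (2, 11, 40.0), (3, 10, 15.5)]
customers = [(10, "Acme"), (11, "Globex")]


def preview(sql, limit=5):
    """Run a transformation query on in-memory sample data and return a few rows."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, amount REAL)"
        )
        conn.execute("CREATE TABLE customers (customer_id INTEGER, name TEXT)")
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", orders)
        conn.executemany("INSERT INTO customers VALUES (?, ?)", customers)
        # Wrap the query so the preview never returns more than `limit` rows.
        return conn.execute(f"SELECT * FROM ({sql}) LIMIT ?", (limit,)).fetchall()
    finally:
        conn.close()


# A join step expressed as custom SQL that references both source tables.
join_sql = """
    SELECT c.name, SUM(o.amount) AS total
    FROM orders AS o
    JOIN customers AS c ON c.customer_id = o.customer_id
    GROUP BY c.name
    ORDER BY c.name
"""

rows = preview(join_sql)
print(rows)
```

Because the preview works on a small sample, a mistake in the join condition or an unexpected column shows up before the step is applied and versioned.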

Key Technical Benefits

- Accelerated Development: The visual interface and live previews shorten the code-run-debug loop. You validate each step against sample data before applying it, instead of rerunning an entire script to check a single change.

- Clarity and Lineage: The graph-based view makes even the most complex pipelines easy to understand. Data lineage is explicit and automatically tracked, simplifying debugging and impact analysis.

- Reproducibility and Governance: Every saved change creates a new, immutable version of the pipeline. You can always roll back to a previous version or inspect the exact logic that produced a given dataset, ensuring full auditability.

- Scalability: The visual interface is only the authoring layer. When you deploy a pipeline, Arkham runs it on a scalable, managed compute engine capable of processing terabytes of data.
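The reproducibility point above can be pictured as an append-only version history. The following Python sketch is an assumption about how such a history might behave, not a description of Arkham's storage: `PipelineVersion`, `VersionStore`, and the choice to implement rollback by re-saving an old definition as a new version are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class PipelineVersion:
    number: int
    definition: str  # canonical JSON of the pipeline steps
    checksum: str    # SHA-256 of the definition, useful for audits


class VersionStore:
    """Append-only history: saving never modifies an earlier version."""

    def __init__(self):
        self._versions = []

    def save(self, steps):
        # sort_keys gives the same JSON, and so the same checksum, for equal steps.
        definition = json.dumps(steps, sort_keys=True)
        checksum = hashlib.sha256(definition.encode("utf-8")).hexdigest()
        version = PipelineVersion(len(self._versions) + 1, definition, checksum)
        self._versions.append(version)
        return version

    def get(self, number):
        if not 1 <= number <= len(self._versions):
            raise KeyError(f"no such version: {number}")
        return self._versions[number - 1]

    def rollback(self, number):
        """Assumed behavior: roll back by saving the old definition as a new version."""
        return self.save(json.loads(self.get(number).definition))


store = VersionStore()
v1 = store.save({"clean_orders": "SELECT * FROM raw_orders WHERE amount > 0"})
v2 = store.save({"clean_orders": "SELECT * FROM raw_orders WHERE amount >= 0"})
v3 = store.rollback(1)
print(v3.number, v3.checksum == v1.checksum)
```

Under these assumptions, the history only ever grows, so the exact logic behind any earlier run stays inspectable even after a rollback.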

Related Capabilities

Understand how the Pipeline Builder fits into the broader platform:

- Data Platform Overview

- Medallion Architecture: The Pipeline Builder is the primary tool for moving data from Bronze to Silver and Gold layers.

- Data Lakehouse: The source and destination for all datasets in the Pipeline Builder.

- Data Connectors: The upstream process that ingests the raw data used in pipelines.