Data Connectors: Automate Data Ingestion

The first mile of any data project is often the hardest. Building and maintaining custom ingestion scripts is a resource-intensive bottleneck that consumes engineering hours and delays critical projects. When a source API changes, these fragile pipelines break, eroding trust in your data.

Our Data Connectors are engineered to solve this problem. We provide a library of pre-built, production-grade integrations that automate data loading from any source system directly into our Lakehouse. Instead of writing code, your team uses a simple UI to build reliable data syncs in minutes, not weeks, freeing them to focus on creating value, not managing infrastructure.

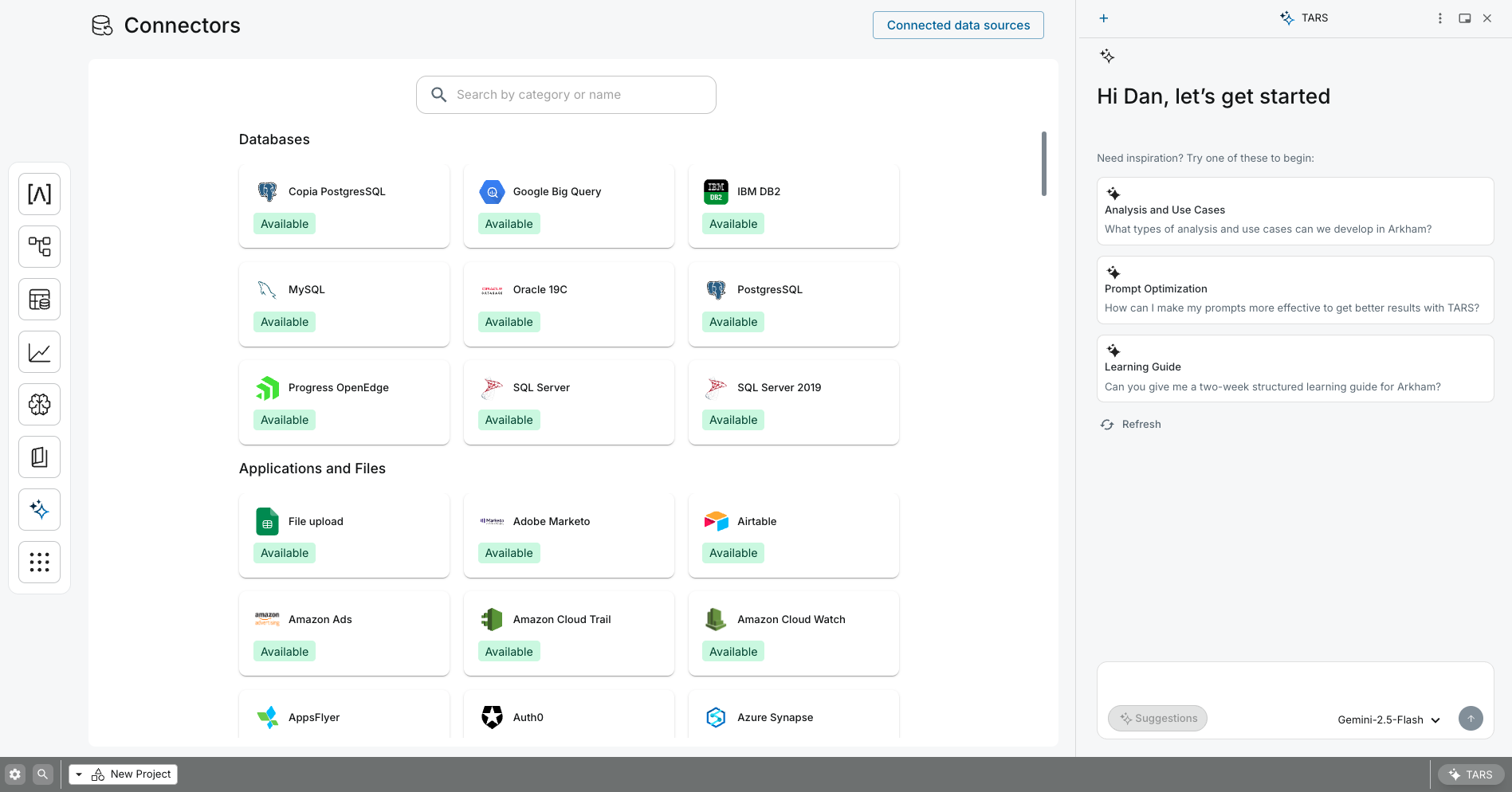

Our Connectors gallery in Arkham, where builders can choose from a library of pre-built integrations to automate data ingestion without writing code.

How It Works: From Source to Staging

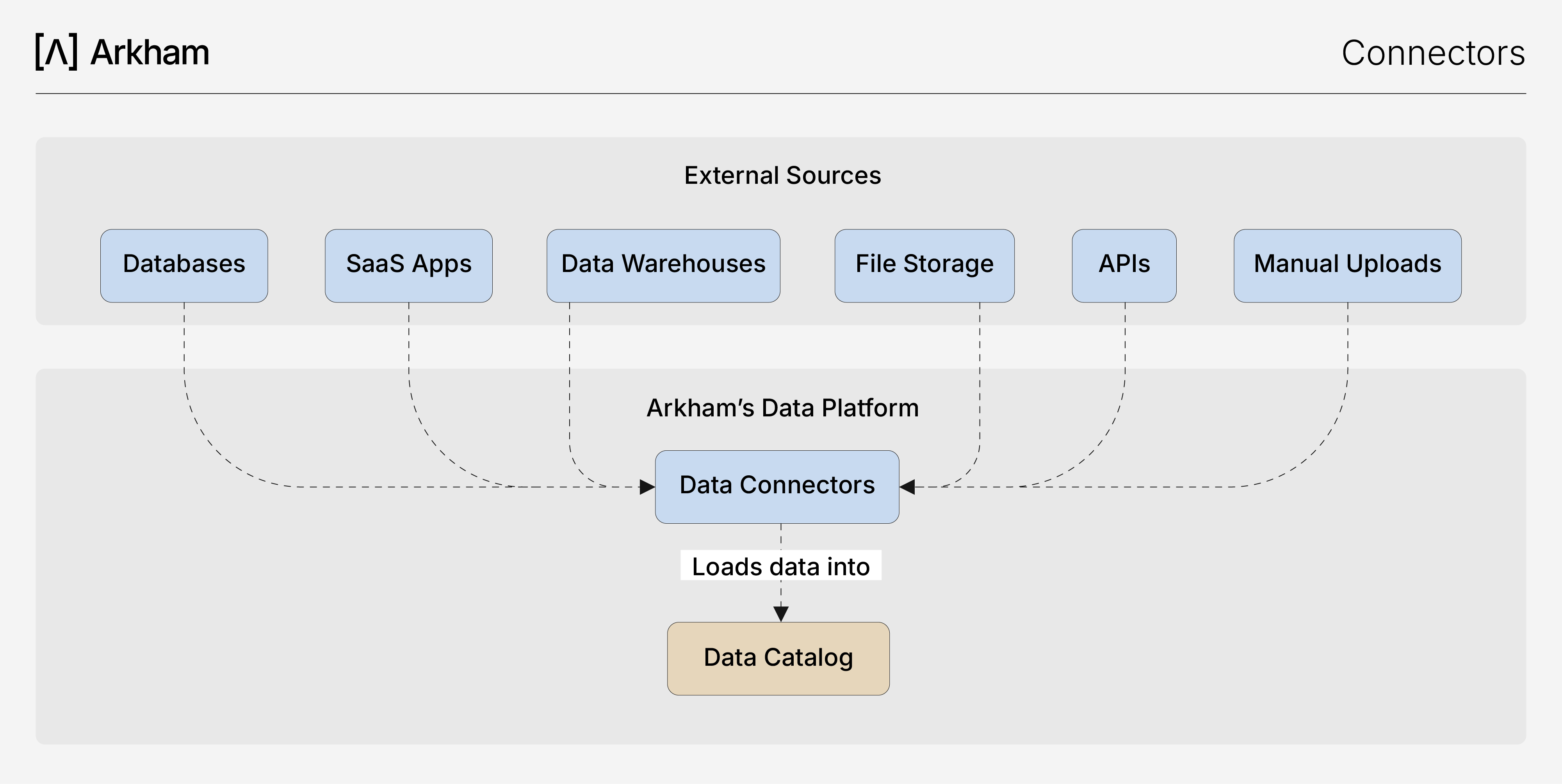

Our Data Connectors streamline the entire ingestion process through a low-code UI. This architecture ensures that data lands in the Staging Tier of our Data Catalog reliably and on schedule, ready for transformation.

This diagram shows the first step in our data workflow, where our Connectors automate the ingestion of data from any external source directly into the Staging Tier of our Data Catalog.

The process is straightforward, and can be driven through a low-code UI or accelerated with TARS.

- Select a Connector: Choose from a wide range of sources in our UI.

- Configure Credentials: Securely provide access credentials through our integrated vault.

- Define Sync Behavior: Select the tables or objects to sync and define the schedule (e.g., batch or incremental).

- Monitor & Manage: Track sync jobs, view logs, and manage connections from a centralized control panel.

🤖 AI-Assisted Ingestion with TARS

You can also perform these actions conversationally using TARS. Instead of navigating the UI, you can simply ask:

"How to create a job to extract the @orders table from my PostgreSQL source and run it every hour."Key Technical Benefits

- Accelerated Development: Move from source to raw data in minutes. By leveraging our pre-built library, your team can focus on data transformation and value creation instead of building and maintaining brittle ingestion scripts.

- Managed & Scalable Infrastructure: Arkham manages the connectors, ensuring they are always up-to-date with source API changes. The service scales automatically to handle terabytes of data without manual intervention.

- Automated Schema Management: Our platform automatically detects schema changes in your source data. For evolving sources, you can enable the "Seamless Schema Sync" option on your sync job to seamlessly propagate these changes to your Staging Dataset, preventing pipeline failures.

- Centralized Control & Governance: Manage all source credentials and data sync schedules in one place. This unified approach simplifies security, ensures compliance, and provides clear visibility into data lineage from the very beginning.

Supported Sources

Our library is continuously expanding. Key categories include:

- Relational Databases: PostgreSQL, MySQL

- SaaS Applications: Salesforce, SAP, Workday

- Data Warehouses: BigQuery, Redshift, Snowflake

- File Storage: Amazon S3, Azure Blob Storage, Google Cloud Storage

- API: Google Analytics, Instagram, Custom REST API

- Manual Uploads: Excel, CSV

Related Capabilities

- Data Platform Overview: See how Connectors fit into the end-to-end data workflow.

- Data Catalog: Our central registry where your newly created Staging Datasets are automatically discovered and managed.

- Pipeline Builder: The logical next step to clean, join, and transform the raw data you've ingested.

- TARS: Your AI co-pilot for accelerating the setup and management of your data syncs.