ML Hub: A Notebook Environment for Production ML

The journey from a promising model in a Jupyter notebook to a governed, production-ready asset is where many machine learning projects stall. Code is rewritten, data lineage is lost, and deployment becomes a complex, manual process. This friction keeps organizations from realizing the full value of their ML investments.

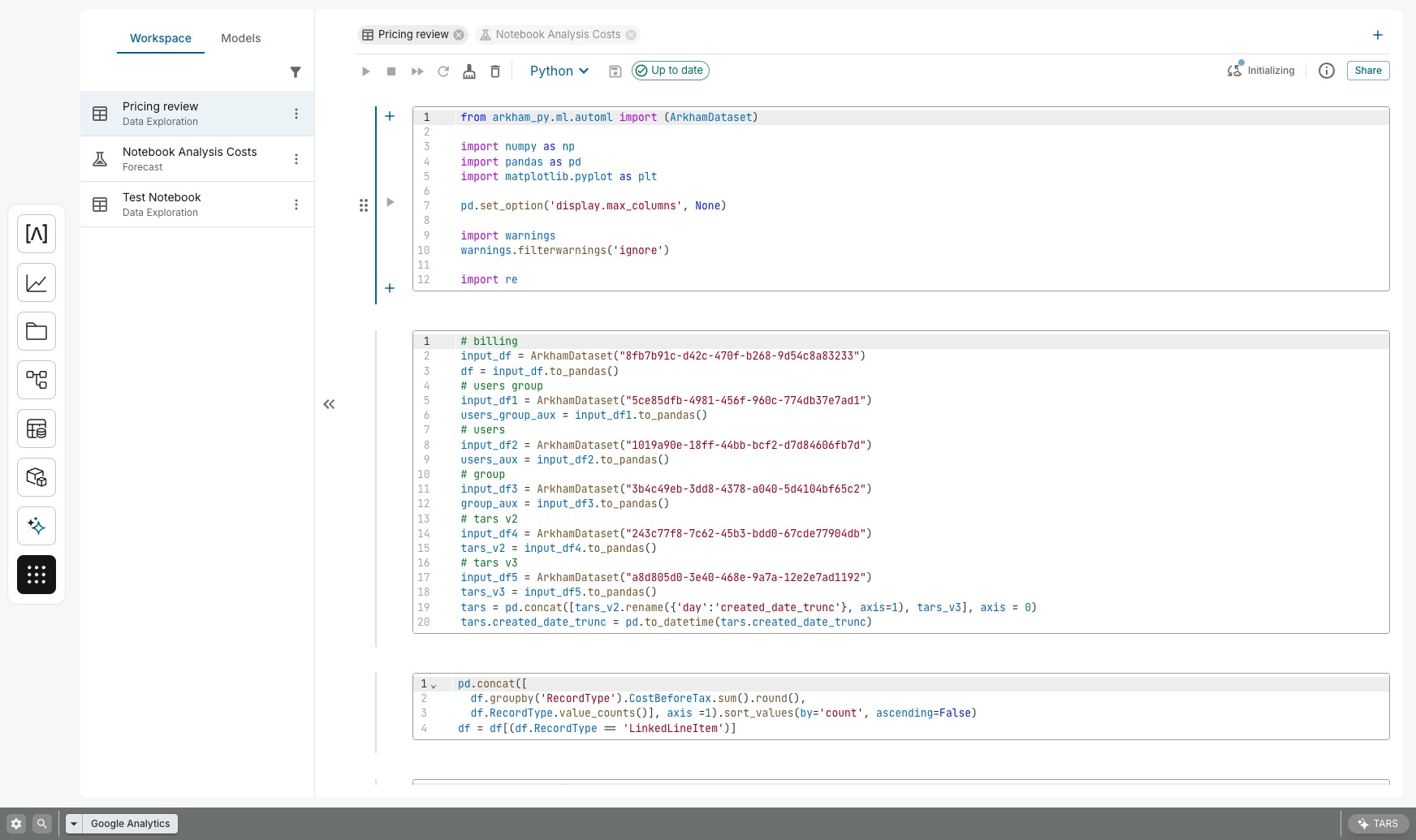

Arkham's ML Hub is engineered to eliminate this friction. It provides a powerful, Python-based notebook interface that is deeply integrated with the rest of the Arkham platform. Here, you can move seamlessly from data preparation to deployment within a single, governed environment, using either custom code or our accelerated AutoML frameworks.

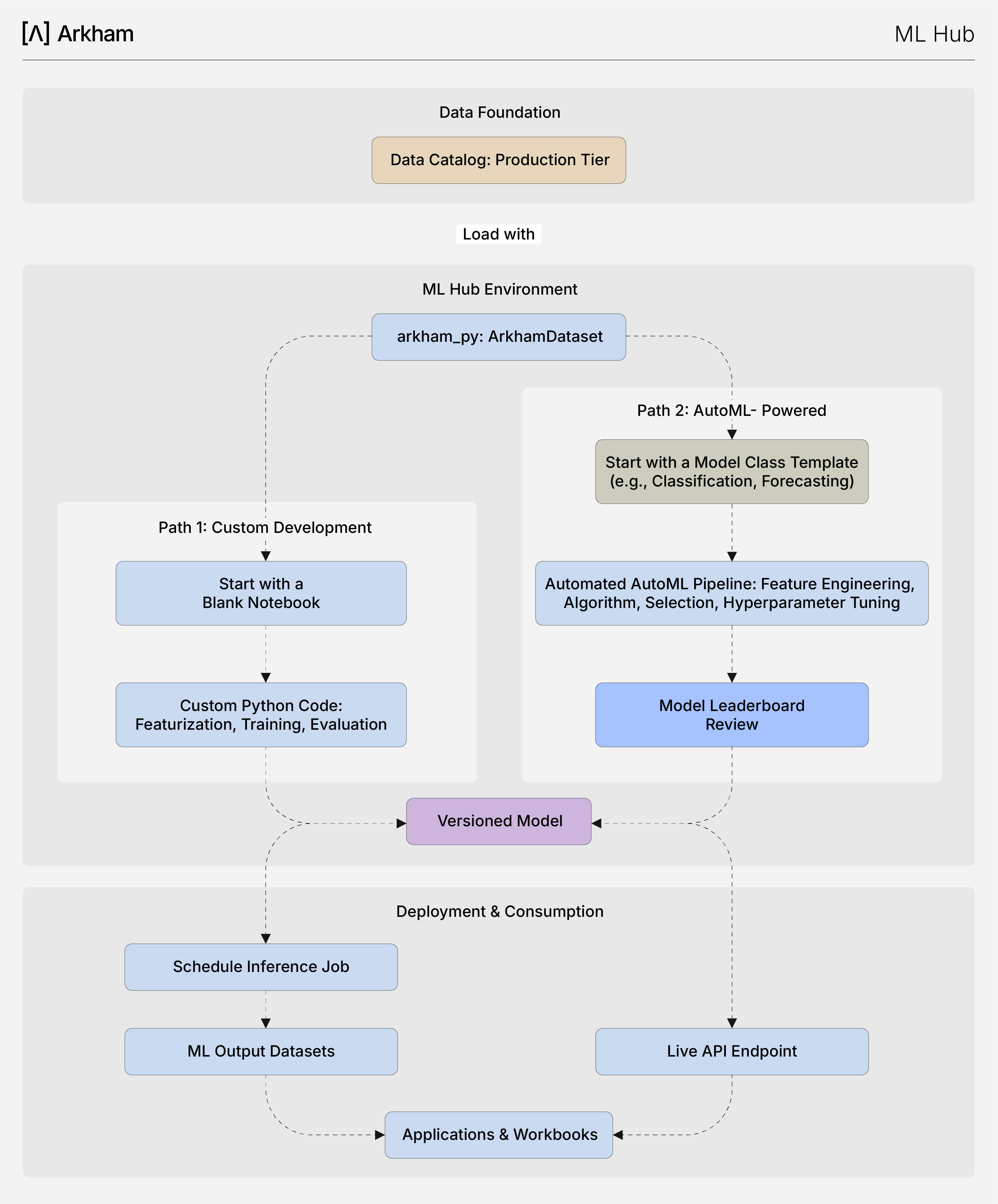

The ML Hub Workflow: From Data to Deployed Model

The ML Hub supports the end-to-end machine learning lifecycle, and the diagram illustrates its two primary workflows: custom, code-first development and the accelerated AutoML framework.

The core of the AutoML framework combines the `Model Class` library with the automated training pipeline in a four-step process designed for speed and efficiency:

- Select a Model Class: Choose from a curated library of more than 10 pre-built, production-grade templates for common use cases (e.g., forecasting, classification). These are "glass-box" templates, not black boxes: their logic and configuration can be inspected and modified.

- Configure in Code: Using `ArkhamPy`, you connect your dataset from the Lakehouse and configure the model's parameters.

- Launch the AutoML Pipeline: Trigger the automated pipeline, which handles the most complex parts of the ML lifecycle: data preprocessing, feature engineering, algorithm selection, and hyperparameter tuning.

- Review and Deploy: The pipeline produces a leaderboard of the best-performing models. Review their metrics, inspect their configurations, and register the chosen model in the Model Registry for deployment with a single command.
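Because the `ArkhamPy` API itself is not documented in this section, the following is only a hypothetical, standard-library sketch of what the AutoML step does conceptually: it fits every candidate algorithm across its hyperparameter grid, scores each fit on a held-out validation split, and ranks the results into a leaderboard. `ModelClass`, `run_automl`, the three toy algorithms, and the synthetic data are all assumptions made for illustration, not ArkhamPy calls; the preprocessing and feature-engineering stages a real pipeline performs are omitted.

```python
from dataclasses import dataclass, field
from itertools import product
from statistics import mean
from typing import Any, Callable, Dict, List, Sequence, Tuple

# Hypothetical illustration only: these names are NOT part of ArkhamPy.
Predictor = Callable[[float], float]


def fit_mean(xs: Sequence[float], ys: Sequence[float]) -> Predictor:
    """Baseline: always predict the training mean."""
    m = mean(ys)
    return lambda x: m


def fit_knn(xs: Sequence[float], ys: Sequence[float], k: int) -> Predictor:
    """k-nearest-neighbours regression on a single feature."""
    pairs = list(zip(xs, ys))

    def predict(x: float) -> float:
        nearest = sorted(pairs, key=lambda p: abs(p[0] - x))[:k]
        return mean(y for _, y in nearest)

    return predict


def fit_linear(xs: Sequence[float], ys: Sequence[float]) -> Predictor:
    """Ordinary least squares for y = a * x + b."""
    mx, my = mean(xs), mean(ys)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    b = my - a * mx
    return lambda x: a * x + b


@dataclass
class ModelClass:
    """A "glass-box" template: every algorithm and its search grid is visible."""
    name: str
    # algorithm name -> (fit function, hyperparameter grid)
    candidates: Dict[str, Tuple[Callable[..., Predictor], Dict[str, List[Any]]]] = field(default_factory=dict)


def run_automl(model_class: ModelClass, train, valid) -> List[Dict[str, Any]]:
    """Fit every (algorithm, hyperparameters) combination; rank by validation MAE."""
    (x_tr, y_tr), (x_va, y_va) = train, valid
    leaderboard = []
    for algo, (fit, grid) in model_class.candidates.items():
        keys = list(grid)
        # product() over an empty grid yields a single empty combination.
        for values in product(*(grid[k] for k in keys)):
            params = dict(zip(keys, values))
            predict = fit(x_tr, y_tr, **params)
            mae = mean(abs(predict(x) - y) for x, y in zip(x_va, y_va))
            leaderboard.append({"algorithm": algo, "params": params, "mae": mae})
    return sorted(leaderboard, key=lambda row: row["mae"])


# Toy usage with synthetic data: y = 2x +/- 1, every 4th point held out.
template = ModelClass(
    name="toy-regression",
    candidates={
        "mean": (fit_mean, {}),
        "knn": (fit_knn, {"k": [1, 3, 5]}),
        "linear": (fit_linear, {}),
    },
)
xs = [float(i) for i in range(20)]
ys = [2 * x + (1 if i % 2 else -1) for i, x in enumerate(xs)]
valid_idx = [i for i in range(20) if i % 4 == 0]
train_idx = [i for i in range(20) if i % 4 != 0]
train = ([xs[i] for i in train_idx], [ys[i] for i in train_idx])
valid = ([xs[i] for i in valid_idx], [ys[i] for i in valid_idx])

board = run_automl(template, train, valid)
for row in board:
    print(row["algorithm"], row["params"], round(row["mae"], 3))
```

Keeping the template as plain data mirrors the "glass-box" idea: every candidate and grid stays visible, and changing the ranking metric only means editing the `mae` line.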

How It Improves Your ML Lifecycle

This integrated, code-first approach provides several key advantages for technical builders:

- Accelerated Baselines: Generate high-performing baseline models in hours, not weeks. A strong early baseline delivers value immediately and sets a clear performance benchmark for any further custom tuning or research.

- Built-in Best Practices: Model Classes encapsulate proven architectures, so teams don't have to "reinvent the wheel." Every project starts from a robust, scalable, and governable foundation.

- Unified MLOps: Whether a model is the direct output of the AutoML pipeline or a heavily customized solution, it is registered, versioned, deployed, and monitored through the same unified MLOps infrastructure, ensuring consistency and reliability from experimentation to production.

- Maximum Flexibility: You are never locked into a restrictive UI. The `ArkhamPy` SDK allows you to extend, override, or build completely bespoke solutions when a Model Class doesn't fit your needs.
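To make the "Unified MLOps" point concrete, here is a hypothetical in-memory registry in plain Python: a model taken from an AutoML leaderboard and a hand-written model go through the same `register` and `deploy` calls and receive sequential version numbers. `ModelRegistry`, `ModelVersion`, the model name, and the metric values are illustrative assumptions, not the Arkham Model Registry API; a production registry would also persist artifacts and feed monitoring.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Dict, List, Optional

# Hypothetical illustration only: not the Arkham Model Registry API.


@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: int
    artifact: Callable[[float], float]  # the trained model (a plain callable here)
    metrics: Dict[str, float]
    source: str  # e.g. "automl" or "custom"
    created_at: datetime


@dataclass
class ModelRegistry:
    """In-memory registry: every model, however it was built, takes the same path."""
    versions: Dict[str, List[ModelVersion]] = field(default_factory=dict)
    live: Dict[str, int] = field(default_factory=dict)

    def register(self, name: str, artifact: Callable[[float], float],
                 metrics: Dict[str, float], source: str) -> ModelVersion:
        history = self.versions.setdefault(name, [])
        mv = ModelVersion(
            name=name,
            version=len(history) + 1,  # versions are sequential per model name
            artifact=artifact,
            metrics=dict(metrics),
            source=source,
            created_at=datetime.now(timezone.utc),
        )
        history.append(mv)
        return mv

    def deploy(self, name: str, version: int) -> None:
        # Only an already-registered version can be deployed.
        if not 1 <= version <= len(self.versions.get(name, [])):
            raise KeyError(f"{name!r} has no version {version}")
        self.live[name] = version

    def deployed(self, name: str) -> Optional[ModelVersion]:
        version = self.live.get(name)
        return None if version is None else self.versions[name][version - 1]


# Toy usage (metric values are made up): AutoML output and a custom model share one path.
registry = ModelRegistry()
v1 = registry.register("demand-forecast", lambda x: 2 * x, {"mae": 1.33}, source="automl")
v2 = registry.register("demand-forecast", lambda x: 2 * x + 0.5, {"mae": 1.10}, source="custom")
registry.deploy("demand-forecast", v2.version)

live = registry.deployed("demand-forecast")
print(live.version, live.source, live.artifact(3.0))
```

Separating `deploy` from `register` lets a new version be recorded and compared before it serves traffic; rolling back is simply another `deploy` call with an earlier version number.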